The code block below contains an error. It should create DataFrame itemsAttributesDf, with columns itemId and attribute, that lists every attribute from the attributes column of DataFrame itemsDf next to the itemId of its row in itemsDf. Find the error.

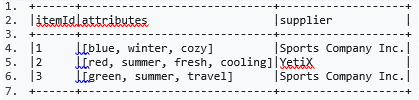

A sample of DataFrame itemsDf is shown above.

Code block:

```python
itemsAttributesDf = itemsDf.explode("attributes").alias("attribute").select("attribute", "itemId")
```

- A. Since itemId is the index, it does not need to be an argument to the select() method.

- B. The alias() method needs to be called after the select() method.

- C. The explode() method expects a Column object rather than a string.

- D. explode() is not a method of DataFrame. explode() should be used inside the select() method instead.

- E. The split() method should be used inside the select() method instead of the explode() method.

Answer(s): D

Explanation:

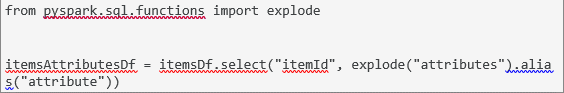

The correct code block looks like this:

The first couple of rows of itemsAttributesDf then look like this:

explode() is not a method of DataFrame. explode() should be used inside the select() method instead.

This is correct.

The split() method should be used inside the select() method instead of the explode() method.

No. The split() method splits a string column into an array of parts. Column attributes, however, is already an array of strings, and turning each array element into its own row is exactly what explode() does.

Since itemId is the index, it does not need to be an argument to the select() method.

No. Spark DataFrames have no index; itemId is an ordinary column, and if it is not passed to select(), it does not appear in the result.

The explode() method expects a Column object rather than a string.

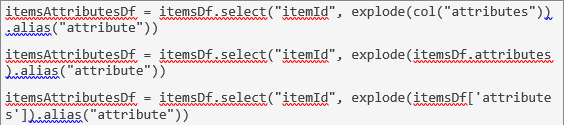

No, a string works fine here. That said, there are some valid alternatives to passing in a string:

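The alias() method needs to be called after the select() method.

No. To name the exploded column attribute, alias() has to be called on the Column returned by explode(), inside select(). Calling alias() on the DataFrame after select() would only give the DataFrame itself an alias.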

More info: pyspark.sql.functions.explode, PySpark 3.1.1 documentation (https://bit.ly/2QUZI1J)

Static notebook | Dynamic notebook: See test 1, Question: 22 (https://flrs.github.io/spark_practice_tests_code/#1/22.html) (Databricks import instructions: https://bit.ly/sparkpracticeexams_import_instructions)